MADDM

Distributed Dexterous Manipulation with Spatially Conditioned Multi-Agent Transformers

Methodology

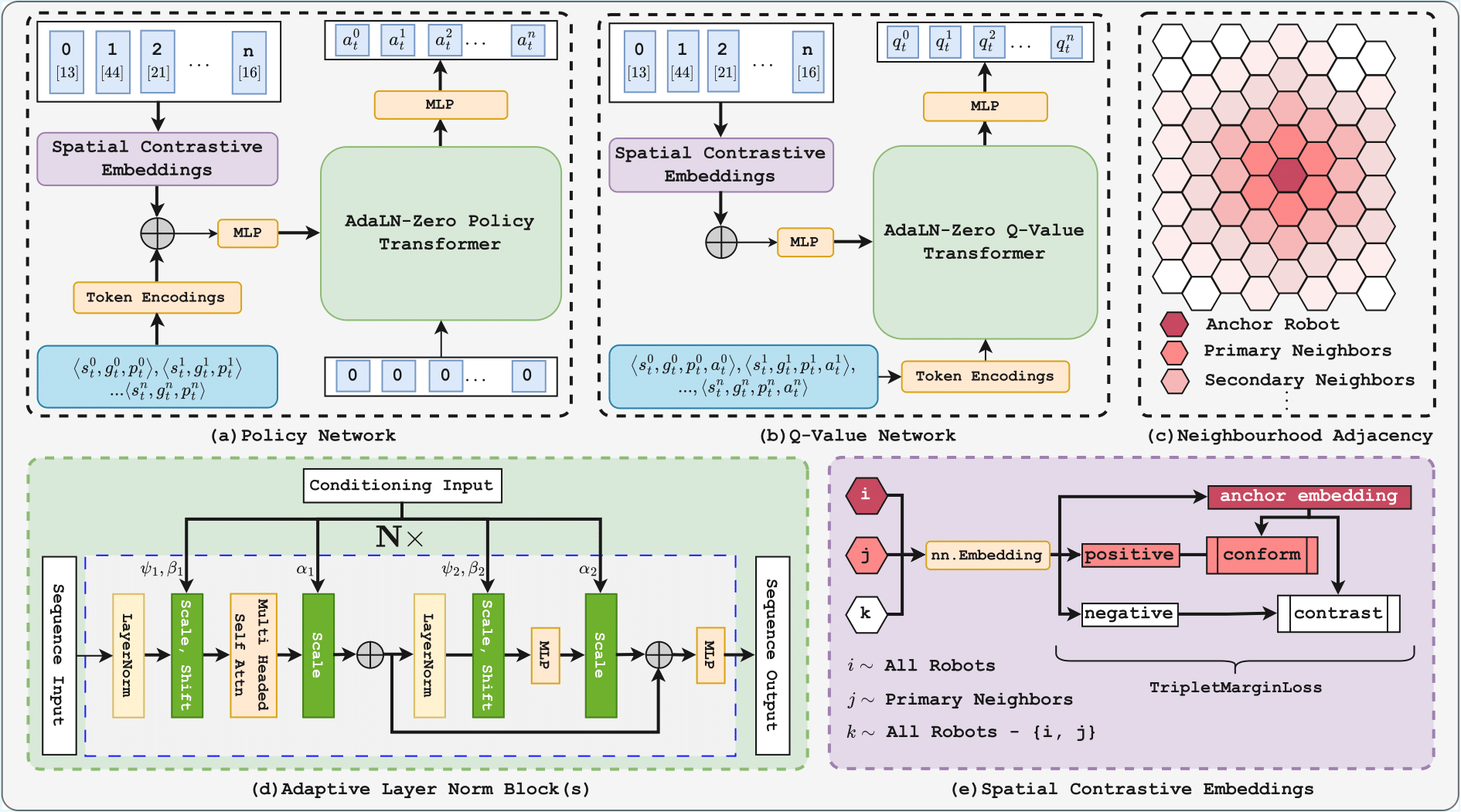

Controlling 64 robots simultaneously requires a policy that can reason about local cooperation and global task goals. Our framework consists of three core contributions: a Spatially Conditioned Multi-Agent Transformer architecture, a Spatial Contrastive Embedding mechanism, and a multi-stage learning pipeline focused on efficient robot utilization.

1. Spatially Conditioned Transformers

Standard positional embeddings (such as RoPE or sinusoidal encodings) do not capture the physical topology of a hexagonal robot grid. We introduce Spatial Contrastive Embeddings (SCEs). These are trained offline with a triplet loss so that physically neighboring robots have similar embeddings, while distant robots have dissimilar ones. The transformer can then account for the spatial layout of the array implicitly, through the embeddings themselves.
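The triplet objective described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the grid pitch, the row-offset hexagonal layout, the neighbor radius, the margin, the 16-dimensional embedding size, and all function names are assumptions made for the example, and the optimizer step is omitted.

```python
import math
import random


def hex_grid_positions(rows=8, cols=8, pitch=1.0):
    """Robot centres for a rows x cols array; odd rows are shifted by half
    a pitch to give hexagonal packing (layout is an assumption)."""
    positions = []
    for r in range(rows):
        for c in range(cols):
            x = (c + 0.5 * (r % 2)) * pitch
            y = r * pitch * math.sqrt(3) / 2
            positions.append((x, y))
    return positions


def dist(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: max(0, d(a, p) - d(a, n) + margin)."""
    return max(0.0, dist(anchor, positive) - dist(anchor, negative) + margin)


def sample_triplet(positions, rng, neighbor_radius=1.01):
    """Pick an anchor robot, a physical neighbor (positive), and a
    non-neighbor (negative), returned as indices into `positions`."""
    n = len(positions)
    a = rng.randrange(n)
    near = [i for i in range(n)
            if i != a and dist(positions[a], positions[i]) <= neighbor_radius]
    far = [i for i in range(n)
           if dist(positions[a], positions[i]) > neighbor_radius]
    return a, rng.choice(near), rng.choice(far)


# Usage: evaluate the loss on randomly initialised (untrained) embeddings.
rng = random.Random(0)
positions = hex_grid_positions()
embeddings = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in positions]
a, p, n = sample_triplet(positions, rng)
loss = triplet_loss(embeddings[a], embeddings[p], embeddings[n])
```

Minimizing this loss over many sampled triplets pulls the embeddings of adjacent robots together and pushes those of non-adjacent robots at least `margin` further away.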

2. Two-Phase Learning Pipeline (MATBC-FT)

The action space is highly redundant: 64 robots with 3 DoF each give 192 DoF in total. To navigate this, we employ a two-phase strategy:

- Phase 1 (MATBC): We pre-train the policy using Behavior Cloning (BC) on demonstrations collected via a visual servoing expert.

- Phase 2 (Fine-Tuning): We fine-tune the policy using Soft Actor-Critic (SAC). This allows the system to adapt to contact dynamics that the kinematic expert cannot model.
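As a rough guide to what each phase optimizes, the two objectives can be written as below. The symbols ($\pi_\theta$ for the policy, $\mathcal{D}$ for the demonstration set, $a^{*}$ for the expert action, $\alpha$ for the entropy temperature, $\gamma$ for the discount factor) are introduced here for illustration, and the squared-error form of the BC loss is an assumption; the second expression is the standard maximum-entropy objective that SAC optimizes.

$$
\mathcal{L}_{\text{BC}}(\theta) = \mathbb{E}_{(s, a^{*}) \sim \mathcal{D}} \left[ \lVert \pi_\theta(s) - a^{*} \rVert_2^2 \right]
$$

$$
J(\pi_\theta) = \mathbb{E}_{\pi_\theta} \left[ \sum_t \gamma^t \big( r(s_t, a_t) + \alpha \, \mathcal{H}(\pi_\theta(\cdot \mid s_t)) \big) \right]
$$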

We use Adaptive Layer Norm (AdaLN-Zero) conditioning within the transformer blocks. Compared with standard self-/cross-attention blocks, this reduces training wall-clock time by ~50% and improves convergence.
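For readers unfamiliar with AdaLN-Zero, the sketch below follows the common formulation from diffusion transformers: a conditioning vector is projected to a per-channel shift, scale, and gate, and that projection is zero-initialized so each block starts as the identity. It is an illustration in plain Python, not the authors' code; the class name, the conditioning input, and the wrapped `sublayer` are hypothetical, and the example does not reproduce the reported speed-up.

```python
import math


def layer_norm(x, eps=1e-5):
    """Layer normalisation without learned affine parameters."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def linear(weight, bias, vec):
    """Dense layer: weight is a list of rows, one row per output unit."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weight, bias)]


class AdaLNZeroBlock:
    """Residual block whose normalisation is modulated by a conditioning vector."""

    def __init__(self, dim, cond_dim, sublayer):
        self.dim = dim
        self.sublayer = sublayer  # e.g. attention or an MLP (hypothetical)
        # Zero initialisation: shift = scale = gate = 0 at the start of training.
        self.weight = [[0.0] * cond_dim for _ in range(3 * dim)]
        self.bias = [0.0] * (3 * dim)

    def __call__(self, x, cond):
        d = self.dim
        params = linear(self.weight, self.bias, cond)
        shift, scale, gate = params[:d], params[d:2 * d], params[2 * d:]
        # Modulate the normalised input, apply the sublayer, then gate the residual.
        h = [n * (1.0 + s) + t for n, s, t in zip(layer_norm(x), scale, shift)]
        h = self.sublayer(h)
        return [xi + g * hi for xi, g, hi in zip(x, gate, h)]


# Usage: with zero-initialised modulation the block returns its input unchanged.
block = AdaLNZeroBlock(dim=4, cond_dim=2, sublayer=lambda h: [2.0 * v for v in h])
x = [1.0, -2.0, 0.5, 3.0]
out = block(x, cond=[0.3, -0.7])
```

Because the gate starts at zero, every block initially passes its input through untouched, and the conditioning only takes effect as the projection weights are learned.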

The System: Distributed Dexterous Manipulation

The hardware consists of an $8 \times 8$ grid of compliant 3-DoF delta robots. The goal is to manipulate objects on the XY plane. The system must decide which robots to activate and how to move them to transport objects to target poses.

Results & Analysis

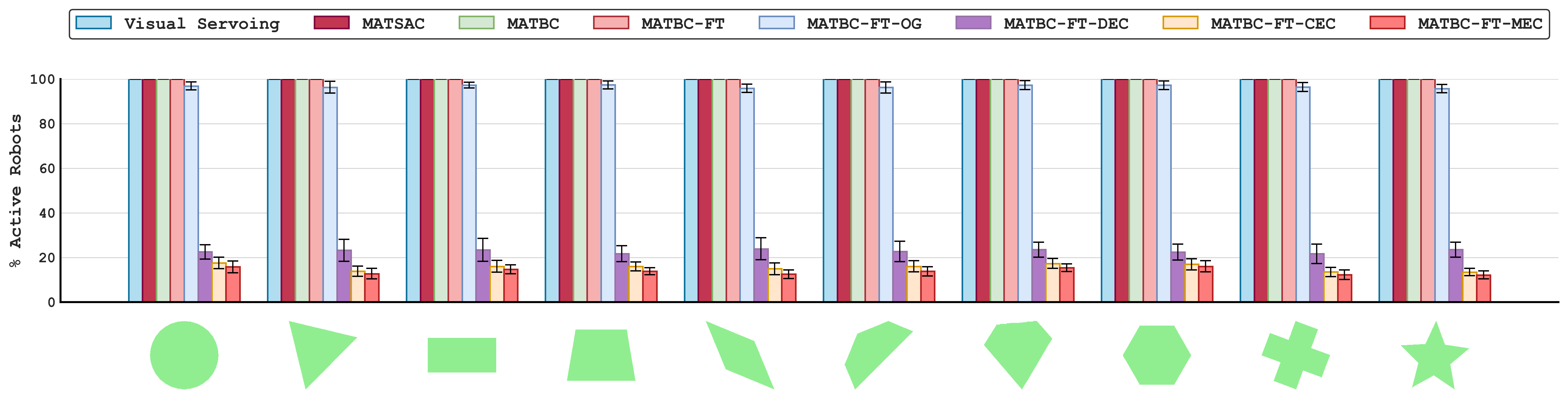

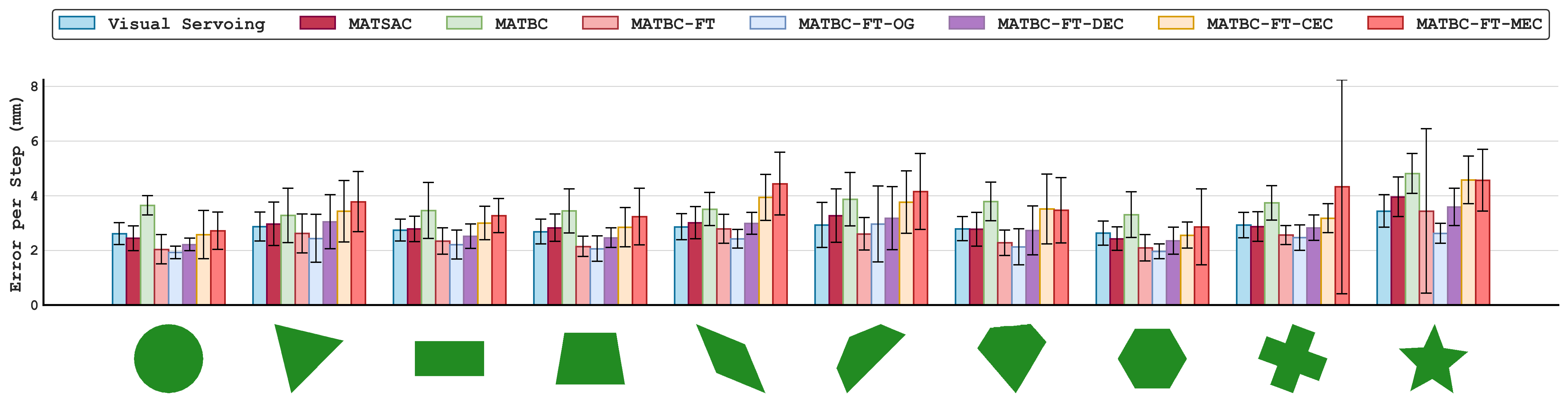

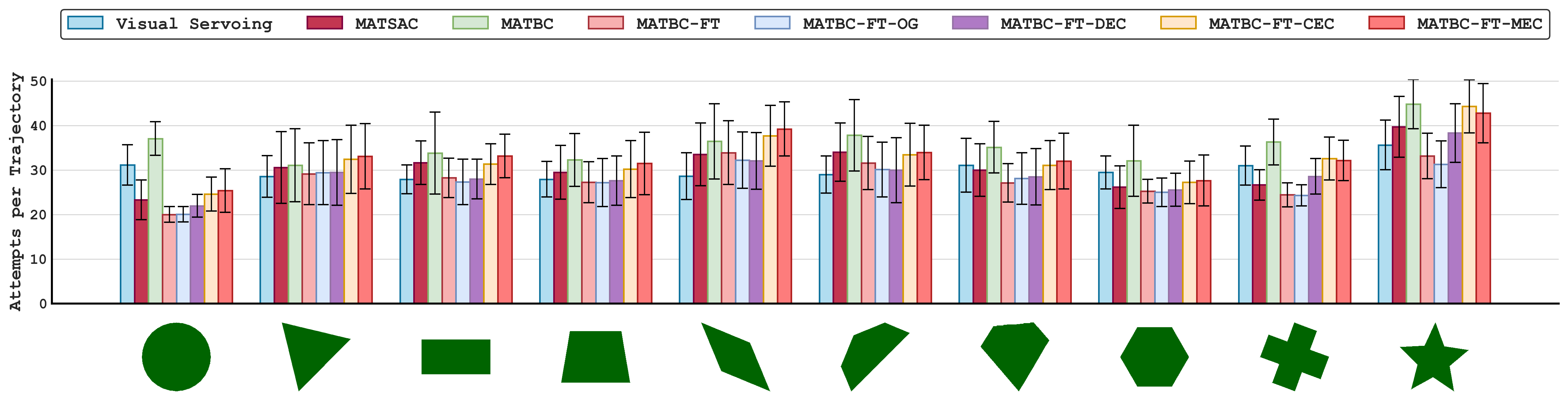

We evaluated our method against Visual Servoing and standard RL baselines. A key focus was Action Selection: minimizing the number of active robots to reduce wear and energy without sacrificing accuracy. We compared four reward shaping strategies: Original (OG), Discrete Execution Cost (DEC), Continuous Execution Cost (CEC), and Merged (MEC).
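The text does not define the four reward variants, so the sketch below is only one plausible reading: OG uses the task reward alone, DEC subtracts a fixed penalty per active robot, CEC subtracts a penalty proportional to each robot's command magnitude, and MEC subtracts both. The activation threshold, the weights, and every function name are assumptions made for illustration.

```python
import math


def command_norm(action):
    """Euclidean norm of one robot's 3-DoF command."""
    return math.sqrt(sum(a * a for a in action))


def active_mask(actions, threshold=1e-3):
    """A robot counts as active if its command norm exceeds the threshold (assumed rule)."""
    return [command_norm(act) > threshold for act in actions]


def discrete_cost(actions, weight=0.01):
    """Assumed DEC term: fixed penalty per active robot."""
    return weight * sum(active_mask(actions))


def continuous_cost(actions, weight=1.0):
    """Assumed CEC term: penalty proportional to total command magnitude."""
    return weight * sum(command_norm(act) for act in actions)


def shaped_reward(task_reward, actions, mode="OG"):
    """Combine the task reward with the execution cost selected by `mode`."""
    if mode == "OG":
        return task_reward
    if mode == "DEC":
        return task_reward - discrete_cost(actions)
    if mode == "CEC":
        return task_reward - continuous_cost(actions)
    if mode == "MEC":
        return task_reward - discrete_cost(actions) - continuous_cost(actions)
    raise ValueError(f"unknown reward mode: {mode}")


# Usage: 64 robots, of which only two receive a non-zero command.
actions = [(0.0, 0.0, 0.0)] * 62 + [(0.01, 0.0, 0.0), (0.0, 0.02, 0.0)]
reward = shaped_reward(task_reward=1.0, actions=actions, mode="DEC")
```

Under this reading, a discrete cost rewards switching robots off entirely, whereas a continuous cost only rewards smaller motions, which is one way the variants could lead to different numbers of active robots.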

Efficiency vs. Performance Trade-off

MATBC-FT-DEC (purple bar), i.e. MATBC-FT trained with the Discrete Execution Cost, offers the best trade-off. It keeps tracking error comparable to full-activation methods while using ~65% fewer robots. This significantly reduces physical wear and prevents entanglement of the compliant delta linkages.

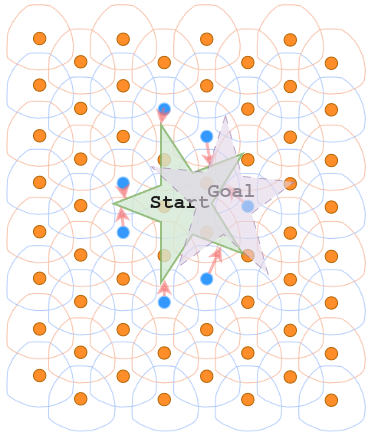

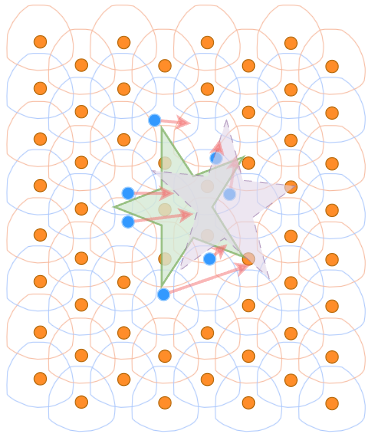

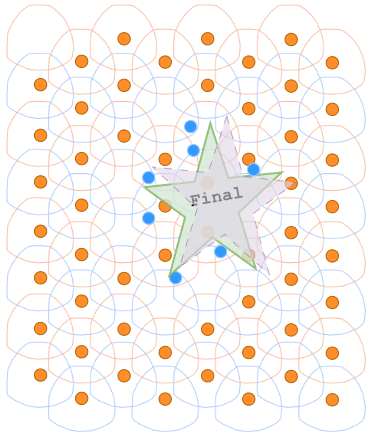

Trajectory Tracking

Conclusion

This work demonstrates that Spatially Conditioned Multi-Agent Transformers are an effective tool for controlling large-scale distributed robotic systems. By explicitly encoding spatial relationships and fine-tuning with execution-aware costs, we achieve high-precision dexterous manipulation that is both sample-efficient and hardware-friendly.